Close your eyes and imagine the night sky filled with billions of stars, galaxies, stellar clusters and asteroids. Incredible, right? Over the next decade, those celestial images will be captured through the Legacy Survey of Space and Time (LSST) at the Vera C. Rubin Observatory in Chile.

The University of Washington is one of the four founders of the LSST Project, which will be the most ambitious and comprehensive optical astronomy survey ever undertaken. And faculty and researchers from the University of Washington DiRAC Institute will play leading roles in developing its science capabilities and data processing pipelines.

Carnegie Mellon University and the University of Washington have announced an expansive, multi-year collaboration to create new software platforms to analyze large astronomical datasets generated by the upcoming Legacy Survey of Space and Time (LSST), which will be carried out by the Vera C. Rubin Observatory in northern Chile. The open-source platforms are part of the new LSST Interdisciplinary Network for Collaboration and Computing (LINCC) and will fundamentally change how scientists use modern computational methods to make sense of big data.

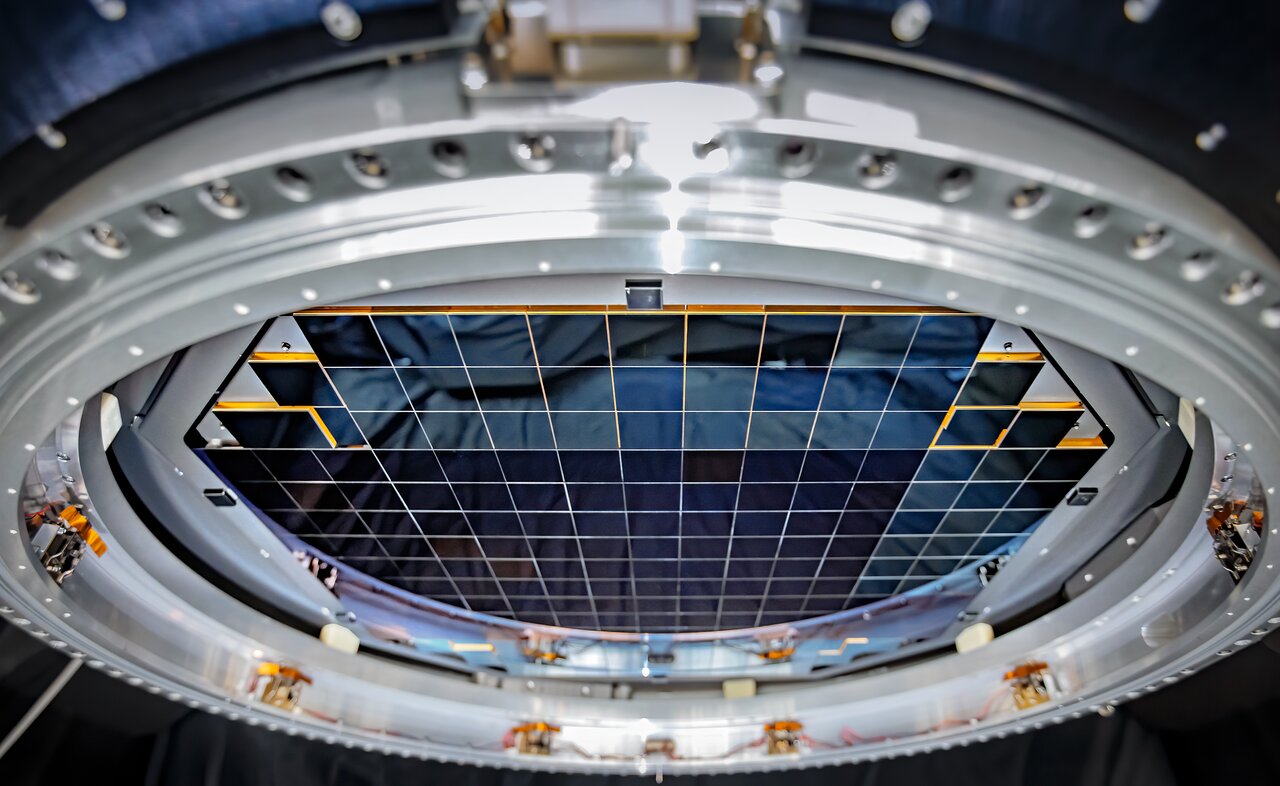

Through the LSST, the Rubin Observatory, a joint initiative of the National Science Foundation and the Department of Energy, will collect and process more than 20 terabytes of data each night (up to 10 petabytes each year for 10 years) and will build detailed composite images of the southern sky. Over its expected decade of observations, astrophysicists estimate the Department of Energy’s LSST Camera will detect and capture images of 30 billion stars, galaxies, stellar clusters and asteroids. Each point in the sky will be visited around 1,000 times over the survey’s 10 years, providing researchers with valuable time series data.

Scientists plan to use this data to address fundamental questions about our universe, such as the formation of our solar system, the course of near-Earth asteroids, the birth and death of stars, the nature of dark matter and dark energy, the universe’s murky early years and its ultimate fate, among other things.

“Our goal is to maximize the scientific output and societal impact of Rubin LSST, and these analysis tools will go a huge way toward doing just that,” said Jeno Sokoloski, director for science at the LSST Corporation. “They will be freely available to all researchers, students, teachers and members of the general public.”

The Rubin Observatory will produce an unprecedented data set through the LSST. To take advantage of this opportunity, the LSST Corporation created the LSST Interdisciplinary Network for Collaboration and Computing (LINCC), whose launch was announced August 9 at the Rubin Observatory Project & Community Workshop. One of LINCC’s primary goals is to create new and improved analysis infrastructure that can accommodate the data’s scale and complexity and that will result in meaningful and useful pipelines of discovery for LSST data.

“Many of the LSST’s science objectives share common traits and computational challenges. If we develop our algorithms and analysis frameworks with forethought, we can use them to enable many of the survey’s core science objectives,” said Rachel Mandelbaum, professor of physics and member of the McWilliams Center for Cosmology at Carnegie Mellon.

The LINCC analysis platforms are supported by Schmidt Futures, a philanthropic initiative founded by Eric and Wendy Schmidt that bets early on exceptional people making the world better. This project is part of Schmidt Futures’ work in astrophysics, which aims to accelerate our knowledge about the universe by supporting the development of software and hardware platforms to facilitate research across the field of astronomy.

“Many years ago, the Schmidt family provided one of the first grants to advance the original design of the Vera C. Rubin Observatory. We believe this telescope is one of the most important and eagerly awaited instruments in astrophysics in this decade. By developing platforms to analyze the astronomical datasets captured by the LSST, Carnegie Mellon University and the University of Washington are transforming what is possible in the field of astronomy,” said Stuart Feldman, chief scientist at Schmidt Futures.

“Tools that utilize the power of cloud computing will allow any researcher to search and analyze data at the scale of the LSST, not just speeding up the rate at which we make discoveries but changing the scientific questions that we can ask,” said Andrew Connolly, a professor of astronomy, director of the eScience Institute and former director of the Data Intensive Research in Astrophysics and Cosmology (DiRAC) Institute at the University of Washington.

Connolly and Carnegie Mellon’s Mandelbaum will co-lead the project, which will consist of programmers and scientists based at Carnegie Mellon and the University of Washington, who will create platforms using professional software engineering practices and tools. Specifically, they will create a “cloud-first” system that also supports high-performance computing (HPC) systems in partnership with the Pittsburgh Supercomputing Center (PSC), a joint effort of Carnegie Mellon and the University of Pittsburgh, and the National Science Foundation’s NOIRLab. LSSTC will run programs to engage the LSST Science Collaborations and broader science community in the design, testing and use of the new tools.

“The software funded by this gift will magnify the scientific return on the public investment by the National Science Foundation and the Department of Energy to build and operate Rubin Observatory’s revolutionary telescope, camera and data systems,” said Adam Bolton, director of the Community Science and Data Center (CSDC) at NSF’s NOIRLab. CSDC will collaborate with LINCC scientists and engineers to make the LINCC framework accessible to the broader astronomical community.

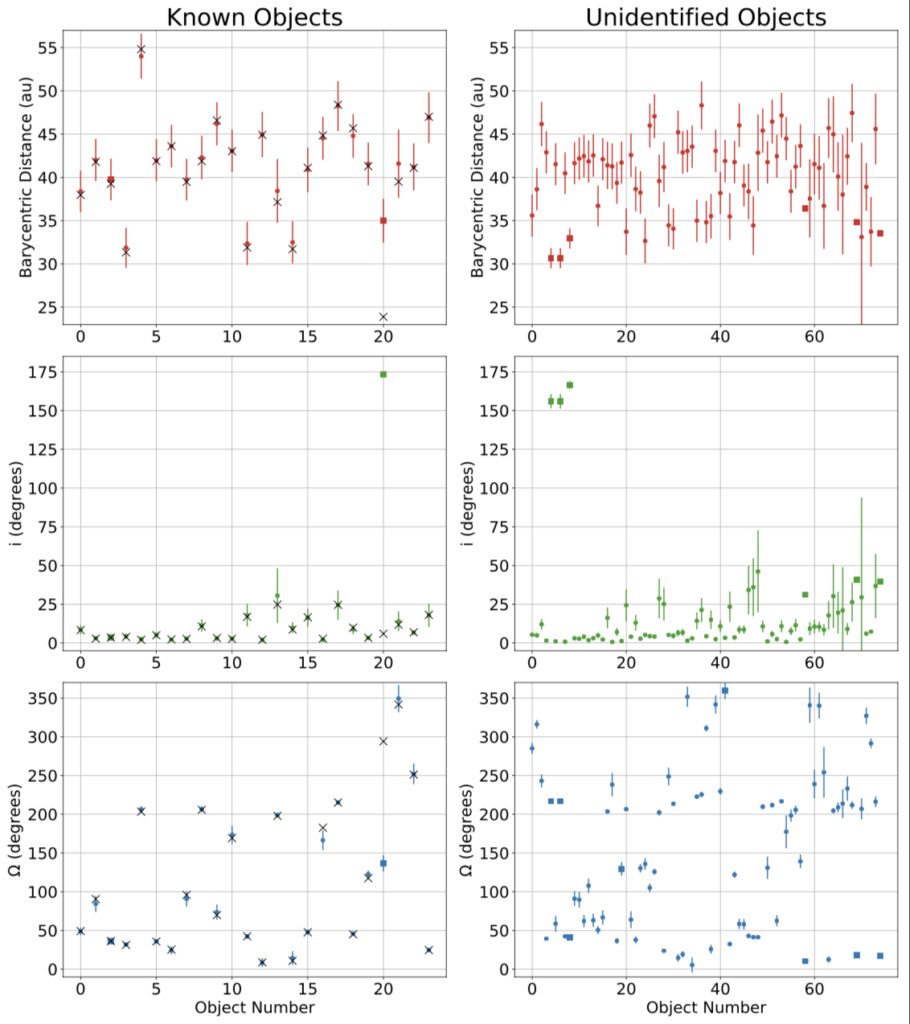

Through this project, algorithms and processing pipelines developed at LINCC will be usable across fields within astrophysics and cosmology to sift through false signals, filter out noise in the data and flag potentially important objects for follow-up observations. The tools developed by LINCC will support a “census of our solar system” that will chart the courses of asteroids; help researchers to understand how the universe changes with time; and build a 3D view of the universe’s history.

“The Pittsburgh Supercomputing Center is very excited to continue to support data-intensive astrophysics research being done by scientists worldwide. The work will set the stage for the forefront of computational infrastructure by providing the community with tools and frameworks to handle the massive amount of data coming off of the next generation of telescopes,” said Shawn Brown, director of the PSC.

Northwestern University and the University of Arizona, in addition to Carnegie Mellon and the University of Washington, are hub sites for LINCC. The University of Pittsburgh will partner with the Carnegie Mellon hub.